New tools for verifying CESM simulation output

This article is part of a CISL News series describing the many ways CISL improves modeling beyond providing supercomputing systems and facilities. These articles briefly describe CISL modeling projects and how they benefit the research community.

Large simulation codes, such as the Community Earth System Model (CESM), are especially complex and continually evolving: features are regularly added, improvements are made, and software and hardware environments change. Given the near-constant state of CESM development and the widespread use of CESM by scientists around the world studying the climate system, software quality assurance for CESM is critical to preserve the quality of the code and instill confidence in the model. Detecting potential errors in CESM has historically been a cumbersome and subjective process, but NCAR now has a new tool that quickly assesses changes in CESM simulation output.

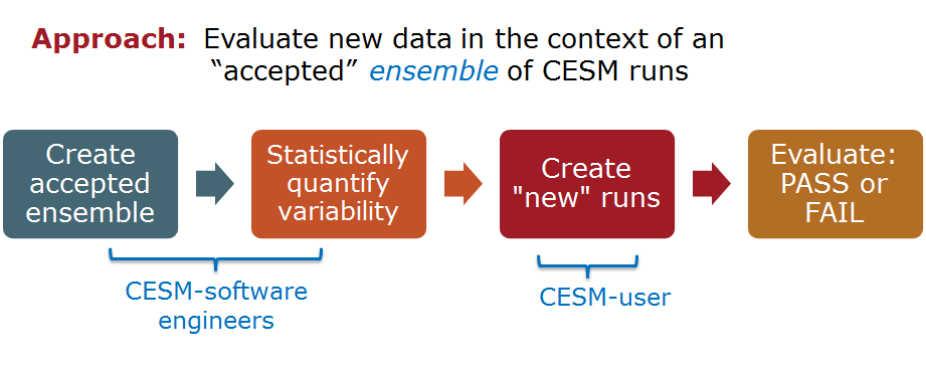

Under the exact same simulation environment, rerunning CESM produces identical simulation output, and code verification is trivial because the “answer” has not changed. However, in almost all practical situations that involve changes in the CESM software or hardware environment (e.g., a new compiler version, code optimization, or a different supercomputer), the new simulation output is not identical to the previous output. In those cases, researchers must be certain that an error has not been introduced. The question then becomes whether the new result is “correct” in some sense. For example, while it’s easy to imagine how a compiler upgrade could rearrange code and produce non-identical simulation output, scientists must ensure that a compiler upgrade does not have a “climate-changing” effect on the new simulation output. Because a simple metric or definition for “climate-changing” does not exist, CISL and NCAR collaborators developed the CESM Ensemble Consistency Test (ECT) tool to evaluate the statistical consistency of simulation outputs.
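One common way that rearranged code yields non-identical output is floating-point rounding: addition of floating-point numbers is not associative, so summing the same values in a different order can change the last bits of the result. The short Python sketch below illustrates that general effect only. It is not taken from CESM, and the function name and values are chosen here purely for illustration.

```python
import math


def sum_in_order(values):
    """Add the values one at a time, in the order given."""
    total = 0.0
    for value in values:
        total += value
    return total


values = [0.1, 0.2, 0.3]

# Same numbers, two summation orders, as a compiler or a different
# processor layout might produce.
forward = sum_in_order(values)
backward = sum_in_order(reversed(values))

print(forward == backward)              # the two sums are not bit-identical
print(math.isclose(forward, backward))  # but they agree to rounding error
```

In a chaotic model, differences this small can grow over many time steps, which is why a bit-for-bit comparison cannot separate harmless reordering from a genuine error.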

Specifically, CESM-ECT determines whether the output of a new simulation is statistically distinguishable from an ensemble of control simulations. An ensemble is a collection of multiple simulations, typically based on subtly different initial conditions. Because the dynamics of the atmosphere and the ocean models are chaotic, slight perturbations in initial conditions result in different (non-identical) outcomes of the model system. Ensembles representing possible outcomes are common tools in weather forecasting and climate modeling as they indicate the amount of variability (and uncertainty) in the model’s predictions. From an ensemble of control simulations run on an accepted software/hardware stack, CESM-ECT determines a statistical distribution that is then used as a metric to evaluate whether a new run is statistically distinguishable from the control ensemble.
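The idea of comparing a new run against a control ensemble can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the pyCECT implementation: it assumes each run is reduced to a vector of global-mean values, summarizes the ensemble with principal components, and flags a run when too many of its component scores fall outside a chosen number of standard deviations. The function names, the synthetic data, and the thresholds `n_sigma` and `max_outliers` are all hypothetical.

```python
import numpy as np


def summarize_ensemble(ensemble):
    """Summarize a control ensemble of shape (n_runs, n_variables).

    Each row is assumed to hold one run's global mean for every variable.
    """
    mean = ensemble.mean(axis=0)
    std = ensemble.std(axis=0, ddof=1)
    standardized = (ensemble - mean) / std
    # Rows of `components` are the principal directions of the ensemble.
    _, _, components = np.linalg.svd(standardized, full_matrices=False)
    scores = standardized @ components.T
    return {
        "mean": mean,
        "std": std,
        "components": components,
        "score_std": scores.std(axis=0, ddof=1),
    }


def count_outlier_scores(summary, new_run, n_sigma=2.0):
    """Count principal-component scores of new_run beyond n_sigma."""
    standardized = (new_run - summary["mean"]) / summary["std"]
    scores = summary["components"] @ standardized
    return int(np.sum(np.abs(scores) > n_sigma * summary["score_std"]))


def is_consistent(summary, new_run, n_sigma=2.0, max_outliers=1):
    """Accept the run if at most max_outliers scores are outliers."""
    return count_outlier_scores(summary, new_run, n_sigma) <= max_outliers


# Synthetic stand-in for a control ensemble: 100 runs, 5 variables.
rng = np.random.default_rng(0)
control = rng.normal(size=(100, 5))
summary = summarize_ensemble(control)

typical_run = control.mean(axis=0)
shifted_run = typical_run + 10.0 * control.std(axis=0, ddof=1)

print(is_consistent(summary, typical_run))
print(count_outlier_scores(summary, shifted_run))
```

Working with principal-component scores rather than raw variables is a design choice in this sketch: many model variables are correlated, so testing them one by one would count the same discrepancy several times.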

The CESM-ECT tool quantitatively represents some of the natural variability in the Earth’s climate system using an ensemble of model runs that are used as a baseline for “accepted” variability. This tool provides end-users with a complete software suite that is easy to use, automated, and computationally very efficient.

“The CESM-ECT tool offers an efficient and easy-to-use test that is accessible to both CESM code developers and CESM users,” said Allison Baker, project scientist in CISL’s Application Scalability and Performance group. “CESM-ECT provides an objective way to determine whether the difference in a simulation output may be attributable to climate system variability or indicate a potential problem with the code itself or the machine it’s run on.”

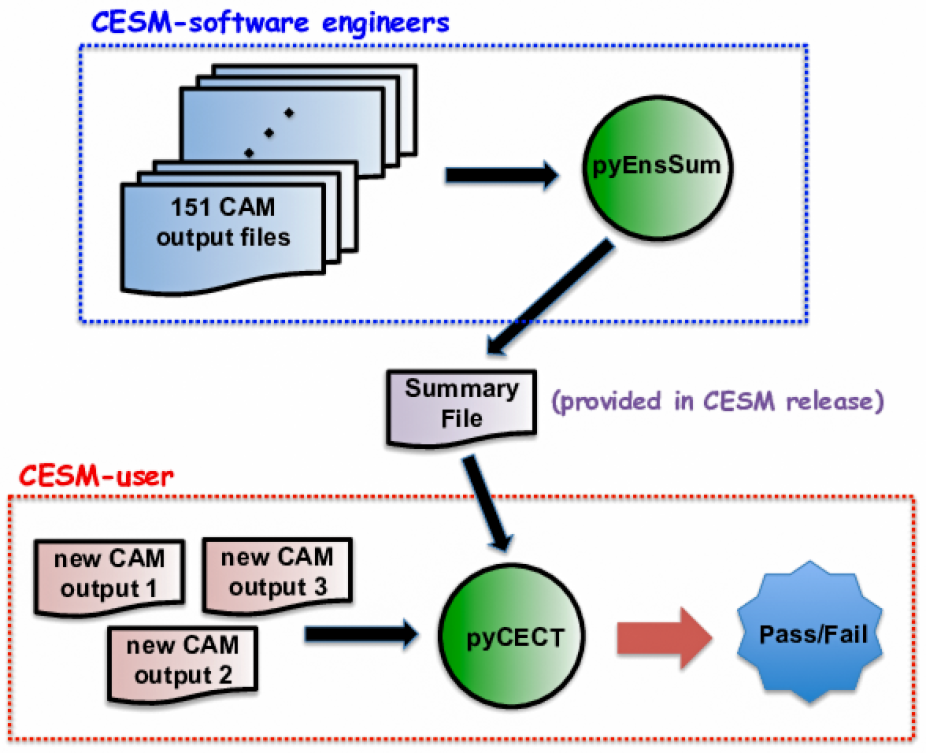

CESM is composed of multiple coupled models that run simultaneously and exchange data to produce a climate prediction. CESM-ECT has module-specific support for the CESM atmosphere component model – the Community Atmosphere Model (CAM) – and the ocean component model. In addition, recent experiments suggest that errors in the land component, the Community Land Model (CLM), are detectable by CESM-ECT via CAM output data due to the tight coupling between CAM and CLM. The most recent addition to the CESM-ECT toolbox is an inexpensive “ultra-fast” tool that evaluates statistical consistency with an ensemble of simulations of only several time steps in length. The CESM-ECT suite of tools is publicly available in NCAR’s GitHub repository.

The ability of CESM-ECT to quickly assess changes in simulation output is a significant step forward in the pursuit of more quantitative metrics for the climate modeling community. Further, by providing rapid feedback to model developers, this tool has made it easier to evaluate new code optimizations and hardware and software upgrades. The CESM-ECT tool is currently being used by NCAR’s CESM software engineering group to verify ports of CESM to new computers and evaluate new CESM software release tags, and it has already proven its utility in detecting multiple errors in software and hardware environments.

This diagram shows CESM-ECT software tools that summarize an ensemble of test runs and supply that information to evaluate a new version of the model. This software helps both developers and users.

These citations list previous research publications about this work:

Daniel J. Milroy, Allison H. Baker, Dorit M. Hammerling, and Elizabeth R. Jessup, 2017: “Nine time steps: ultra-fast statistical consistency testing of the Community Earth System Model (pyCECT v3.0),” Geoscientific Model Development Discussions. In review. (DOI: 10.5194/gmd-2017-49).

Daniel J. Milroy, Allison H. Baker, Dorit M. Hammerling, John M. Dennis, Sheri A. Mickelson, and Elizabeth R. Jessup, 2016: “Towards characterizing the variability of statistically consistent Community Earth System Model simulations.” Procedia Computer Science (ICCS 2016), Vol. 80, pp. 1589–1600 (DOI: 10.1016/j.procs.2016.05.489).

Allison H. Baker, Y. Hu, D.M. Hammerling, Y. Tseng, X. Hu, X. Huang, F.O. Bryan, and G. Yang, 2016: “Evaluating Statistical Consistency in the Ocean Model Component of the Community Earth System Model (pyCECT v2.0).” Geoscientific Model Development, Vol. 9, pp. 2391–2406 (DOI: 10.5194/gmd-9-2391-2016).

Allison H. Baker, D.M. Hammerling, M.N. Levy, H. Xu, J.M. Dennis, B.E. Eaton, J. Edwards, C. Hannay, S.A. Mickelson, R.B. Neale, D. Nychka, J. Shollenberger, J. Tribbia, M. Vertenstein, and D. Williamson, 2015: “A new ensemble-based consistency test for the Community Earth System Model (pyCECT v1.0).” Geoscientific Model Development, Vol. 8, pp. 2829–2840 (DOI: 10.5194/gmd-8-2829-2015).